Introduction

Welcome back to ‘From the Computer to the Clinic’ - a newsletter about computational biology and its contributions to biomedical research.

In this newsletter, we explore how computational biology research can drive clinical progress. By sharing success stories from one disease area or domain of research, we aim to inspire the use of these approaches in other diseases and research areas as well.

If you haven’t already, you can subscribe to this newsletter, or share it with friends and colleagues.

Part II

If you missed part I of this series, you can check it out here.

How do you build an AI chatbot like Penny? If you’ve ever used ChatGPT, its ability to respond to very detailed and specific questions can feel magical – but under the surface, this ability boils down to two things: a set of mathematical equations and a lot of data.

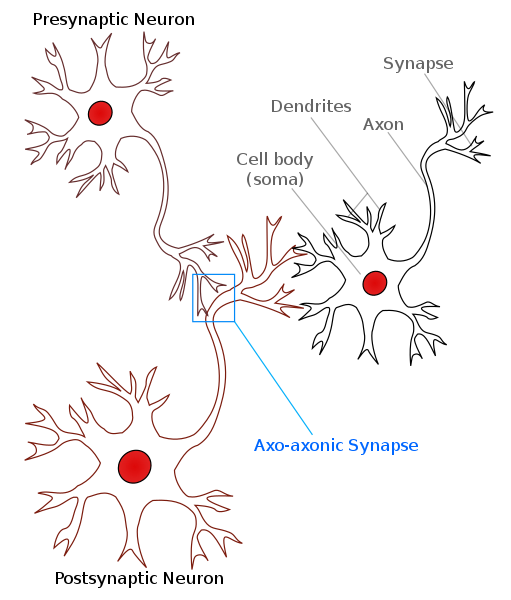

A fundamental computational concept that powers modern AI language models like ChatGPT and Penny the postpartum chatbot is the artificial neural network. The idea of an artificial neural network, as the name suggests, was inspired by the study of biological neural networks - the intricate relay system of neurons that conduct electrical impulses through the brain and the rest of the body. These neurons are ultimately responsible for all movement, memory, conscious thought, and other mental and bodily functions. The idea that nerve tissue is made up of individual cells called neurons that must act together to accomplish cognition and other mental tasks (the so-called ‘neuron theory’) was hotly debated through the start of the 20th century. Some prominent neuroscientists, proponents of the ‘reticular theory’, argued that neural tissue was a continuous mesh of biological material rather than a collection of individual cells.

Though the reticular theory was ultimately proven incorrect, the work of Camillo Golgi and others in this camp was instrumental for the field of neuroscience. In fact, Santiago Ramón y Cajal, who mapped out the microscopic structure of the brain and established the validity of the neuron theory, relied heavily on Golgi’s method for staining and studying the structure of nerves. The two men shared the Nobel Prize in Physiology or Medicine in 1906.

By the mid-20th century, the neuron theory was widely accepted. In the 1940s, scientists began developing mathematical theories of how neurons could work together as a network: encoding information about the environment and learning to interpret this information to guide bodily activity (see, for example, McCulloch & Pitts, 1943; Hebb, 1949). Drawing on biological knowledge, these theorists laid out a series of principles that they believed govern how networks of neurons in the brain operate.

The principles of neural networks can be explained from the perspective of a single neuron. An individual neuron can receive a signal (either from an external stimulus or a neuron upstream in the network), and it can relay this information to other neurons downstream. For the individual neuron to ‘fire’ – to relay its information to another neuron in the network – the signal it receives must be above a certain threshold. As a biological example, consider the body’s response to cold. You wouldn’t want to start shivering (which expends a significant amount of the body’s energy) every time a weak gust of wind blew by - it needs to be sufficiently cold for shivering to be useful.

Source: Shivansh Dave, Wikimedia Commons, CC BY-SA 4.0
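For readers who like to see ideas in code, the threshold principle described above can be sketched in a few lines of Python. This is only an illustration: the function name, the signal scale, and the threshold value are all made up for this example, and a real neuron is far more complicated.

```python
def fires(signal_strength: float, threshold: float) -> bool:
    """Return True only when the incoming signal is above the threshold."""
    return signal_strength > threshold


# Hypothetical numbers on an arbitrary 0-to-1 "how cold does it feel" scale.
SHIVER_THRESHOLD = 0.7
weak_gust = 0.2
winter_storm = 0.9

print(fires(weak_gust, SHIVER_THRESHOLD))     # False: not worth shivering
print(fires(winter_storm, SHIVER_THRESHOLD))  # True: cold enough to shiver
```

Anything at or below the threshold is simply ignored, which is what keeps the neuron from reacting to every small disturbance.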

Another key idea of neural networks is the possibility of multiple inputs. Each neuron within the network can receive inputs from multiple upstream neurons at once – some of which encourage the neuron to fire, and others of which act against firing. It is the sum of these inputs that determines whether the neuron fires. The connections are not necessarily of equal strength: for the individual neuron deciding whether to fire, some upstream neurons may be more influential (or carry more weight in the decision) than others.
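The idea of summing inputs of unequal strength can also be written as a short Python sketch. The function name and every number here are assumptions chosen for illustration: a positive weight stands for a connection that encourages firing, a negative weight for one that acts against it, and a larger magnitude for a more influential connection.

```python
def neuron_fires(inputs, weights, threshold):
    """Add up each input multiplied by its connection weight, then compare to the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total > threshold


# Three upstream neurons, all currently active (1 = active, 0 = silent).
upstream_activity = [1, 1, 1]

# Hypothetical weights: two encouraging connections and one opposing connection.
mild_opposition = [0.6, 0.5, -0.4]
strong_opposition = [0.6, 0.5, -0.8]

print(neuron_fires(upstream_activity, mild_opposition, threshold=0.5))    # True
print(neuron_fires(upstream_activity, strong_opposition, threshold=0.5))  # False
```

The same three upstream neurons are active in both cases. Only the strength of the opposing connection changes, and that alone is enough to flip the decision.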

As an example of neural networks in action, consider a child who is learning to distinguish between different kinds of animals. Perhaps this child has both a cat and a dog in their home. When their dog walks into the room, various input signals to the child’s brain, conveyed through neurons, will help them recognize their pet properly: the sight of the dog - its shaggy fur and long floppy ears - the sound of its bark, and the distinctive ‘wet dog’ smell after it has wandered in from a walk in the rain. Each of these inputs will travel to the brain, where some deciding neuron (or group of neurons) will weigh them and tell the child that a dog is in their presence.

A very young child who has not seen many cats and dogs might confuse the two. After all, both are four-legged creatures with ears, fur, and a tail that typically live inside the home. Over time, however, with enough exposure to different breeds of dogs and cats in pictures or in person, under different lighting and other conditions (wet fur, dry fur, etc.), the child will learn to reliably distinguish the two. The network of neurons in their brain will be fine-tuned to recognize the key distinguishing features that make a cat a cat and a dog a dog.
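As a rough analogy for this kind of fine-tuning, here is a toy Python sketch in which a single artificial "deciding neuron" adjusts its connection weights each time it gets an example wrong. It uses a simple perceptron-style update rule, which is one classic textbook approach and not a claim about how a child's brain actually learns. The two features (ear floppiness and how bark-like the sound is) and all of the numbers are invented for illustration.

```python
def predict(features, weights, bias):
    """Return 1 ("dog") if the weighted sum is above zero, otherwise 0 ("cat")."""
    total = sum(x * w for x, w in zip(features, weights)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=10, learning_rate=0.1):
    """Nudge the weights after every mistake (perceptron-style update)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(features, weights, bias)  # 0 when correct
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Made-up examples: (ear floppiness, bark-like sound), label 1 = dog, 0 = cat.
examples = [
    ((0.9, 1.0), 1),
    ((0.7, 0.8), 1),
    ((0.2, 0.0), 0),
    ((0.1, 0.1), 0),
]

weights, bias = train(examples)
for features, label in examples:
    print(features, "predicted:", predict(features, weights, bias), "actual:", label)
```

Before any training, all of the weights are zero and this toy neuron labels every animal a cat. After a few passes over the examples, it labels all four correctly.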

The next part of this series will show how artificial neural networks have been designed to replicate the characteristics of biological neural networks - allowing computers to perform a wide variety of complicated and useful tasks.

Stay tuned for part III…